Sunday, May 31, 2015

Friday, May 29, 2015

Shutdown procedure of Isilon cluster via CLI

Below is the process for shutting down an EMC Isilon cluster via the CLI.

Step 1: Ensure there is no read/write activity on the cluster. Check the open SMB sessions and NFS locks, and ask users to close their sessions:

# isi smb sessions list

# isi nfs nlm locks list

Step 2: Check the status of the client-facing protocols running on the cluster, then disable them:

# isi services apache2

# isi services isi_hdfs_d

# isi services isi_iscsi_d

# isi services ndmpd

# isi services nfs

# isi services smb

# isi services vsftpd

Now disable each service that is running:

# isi services -a <service name> disable
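The per-service disable commands can be generated instead of typed one by one. Below is a minimal dry-run sketch in Python, assuming the service names listed in Step 2; the helper name `build_disable_commands` is hypothetical, and the script only prints the commands, it does not run them:

```python
# Service names taken from Step 2 of this post.
CLIENT_SERVICES = [
    "apache2", "isi_hdfs_d", "isi_iscsi_d", "ndmpd", "nfs", "smb", "vsftpd",
]


def build_disable_commands(services):
    """Return one 'isi services -a <name> disable' command string per service."""
    return [f"isi services -a {name} disable" for name in services]


if __name__ == "__main__":
    # Dry run: print the commands so they can be reviewed before use.
    for command in build_disable_commands(CLIENT_SERVICES):
        print(command)
```

Only pass in the services that Step 2 showed as running, so that you know exactly what to re-enable later.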

Step 3: Flush the node journals to the file system:

# isi_for_array -s isi_flush

Note: Run the command again if any node fails to flush its journal.
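The "run it again if it fails" rule can be sketched as a small retry wrapper. This is only an illustration in Python: the function names are hypothetical, and treating a non-zero exit status as "a node failed to flush" is my assumption, so check the command output yourself as well:

```python
import subprocess

FLUSH_COMMAND = ["isi_for_array", "-s", "isi_flush"]  # command from Step 3


def run_flush():
    """Run the flush once; exit status 0 is ASSUMED to mean every node flushed."""
    return subprocess.run(FLUSH_COMMAND).returncode == 0


def flush_with_retry(flush=run_flush, attempts=2):
    """Call flush() up to `attempts` times.

    Returns the attempt number that succeeded, or None if all attempts failed.
    """
    for attempt in range(1, attempts + 1):
        if flush():
            return attempt
    return None
```

Passing the flush function as a parameter keeps the retry logic testable without a cluster.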

Step 4: Shut down each node sequentially; do not run 'isi_for_array shutdown -p'.

a) Connect to the node and run:

# isi config

>>> shutdown

Watch the console and ensure that the node shuts down properly.

Note: If you wish to shut down all nodes simultaneously, run:

# isi config

>>> shutdown all

Step 5: Once the nodes are powered back up, run the following command to check the cluster status:

# isi status -q

Step 6: Enable all the services that you disabled in Step 2:

# isi services apache2 enable

# isi services isi_hdfs_d enable

# isi services isi_iscsi_d enable

# isi services ndmpd enable

# isi services nfs enable

# isi services smb enable

# isi services vsftpd enable

Step 7: Verify that clients can connect to the cluster and perform operations as usual.

Happy Learning!

Sunday, May 10, 2015

XtremIO Theory of Operation

Below is the theory of operation for XtremIO. I'll try to cover as much ground as I can while keeping things simple.

XtremIO is an all-flash array based on a scale-out architecture. The system's building block is the X-Brick; X-Bricks can be clustered together to grow the array.

Each X-Brick consists of:

a) 1 DAE (disk array enclosure), containing 25 SSDs, 2 PSUs and 2 redundant SAS interconnect modules.

b) 1 battery backup unit (BBU)

c) 2 storage controllers. Each controller has 2 PSUs, two 8 Gb/s FC ports, two 10GbE iSCSI ports, two 40 Gb/s InfiniBand ports and one 1 Gb/s management port.

Note: In a single-brick cluster you may need an additional BBU for redundancy.

System operation is controlled via a stand-alone, dedicated Linux-based server called the XtremIO Management Server (XMS). One XMS can manage only one cluster. The array continues operating if the XMS is disconnected, but it can't be monitored or configured. The XMS can be either a virtual or a physical server. It communicates with each storage controller in the X-Brick via the management LAN, and lets the array administrator manage the array via GUI or CLI.

XtremIO Operating System:

XtremIO uses an open-source Linux distribution as its base platform, on top of which runs XIOS. XIOS manages the functional modules and the RDMA functions over InfiniBand (IB) between all storage controllers in the cluster. XIOS handles all activities in a storage controller. There are 6 software modules that are responsible for all system functions.

1) I/O modules R, C & D, which are used for routing, control and data placement throughout the array.

Routing Module: It translates all SCSI commands into internal XtremIO commands. It is also responsible for breaking incoming I/O into 4K chunks and calculating the hash value of each chunk.

Control Module: This module contains the address-to-hash (A2H) mapping table.

Data Module: This module contains the hash-to-physical (H2P) SSD address mapping. It is also responsible for maintaining data protection (XDP).

2) Platform Module: Each storage controller runs a PM to manage the hardware and system processes; it also restarts system processes if required. The PM also acts as the clustering agent that manages the entire cluster.

3) Management Module: Each storage controller has one management module, running in an active/passive arrangement. If one SC fails, the MM starts on the other SC of the X-Brick. If both SCs fail, the system halts.

The picture below depicts the module communication flow:

Write Operation:

When a host writes an I/O to the array:

1) It is picked up by the R module, which breaks it into 4K chunks and calculates the corresponding hash value for each chunk.

2) The C module stores the hash value in its address-to-hash (A2H) mapping table.

3) The D module updates the physical address information in its mapping table before storing the data on the physical SSD.

4) The system sends the acknowledgement back to the host.
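The write steps above can be sketched as a toy model in Python. This is not XtremIO code: the class and attribute names are hypothetical, SHA-1 is only a stand-in for whatever fingerprint the array really uses, and skipping the physical write when a hash is already known is my reading of the "duplicated I/O" case:

```python
import hashlib

CHUNK_SIZE = 4096  # 4K chunks, as described for the R module


class ToyArray:
    """Toy model of the R/C/D write path; all names are hypothetical."""

    def __init__(self):
        self.a2h = {}  # C module: logical block address -> hash
        self.h2p = {}  # D module: hash -> physical slot
        self.ssd = []  # stand-in for physical SSD blocks

    def write(self, lba, data):
        """Store data starting at block `lba`; return how many new blocks hit 'SSD'."""
        new_blocks = 0
        for offset in range(0, len(data), CHUNK_SIZE):
            chunk = data[offset:offset + CHUNK_SIZE]
            # R module: fingerprint the chunk (SHA-1 is an assumption).
            digest = hashlib.sha1(chunk).hexdigest()
            # C module: record address -> hash.
            self.a2h[lba + offset // CHUNK_SIZE] = digest
            # D module: update hash -> physical mapping, then store, only if new.
            if digest not in self.h2p:
                self.h2p[digest] = len(self.ssd)
                self.ssd.append(chunk)
                new_blocks += 1
        return new_blocks
```

For example, writing 8 KB of identical bytes creates two A2H entries but only one physical block, because both chunks produce the same hash.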

The picture below depicts the normal write I/O operation:

Write operation of duplicated I/O:

Read Operation:

Whenever a read I/O is issued to the array:

1) The system analyses each request to determine the LBA (logical block address) of each data block. The LBA is tracked in the C module.

2) The C module looks up the hash value for that LBA and passes it to the D module.

3) The D module resolves the hash to the relevant physical SSD address, and the data stored there is returned to the host.
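The read path is then two table lookups followed by a fetch. A minimal Python sketch, with small hand-made tables standing in for the C and D module state (the hash strings `h1`/`h2` and the block contents are placeholders, not real values):

```python
def read_block(lba, a2h, h2p, ssd):
    """Resolve one logical block: address -> hash -> physical slot -> data."""
    digest = a2h[lba]        # C module: address-to-hash lookup
    physical = h2p[digest]   # D module: hash-to-physical lookup
    return ssd[physical]     # data read from the 'SSD' and returned to the host


# Placeholder state: LBAs 0 and 2 hold identical data, so they share a hash.
a2h = {0: "h1", 1: "h2", 2: "h1"}
h2p = {"h1": 0, "h2": 1}
ssd = [b"alpha", b"beta"]

if __name__ == "__main__":
    print(read_block(2, a2h, h2p, ssd))
```

Two logical addresses that share a hash resolve to the same physical block, which is why deduplicated data costs no extra SSD space.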

The picture below depicts the read operation:

Also read array-administration and xtremio-architecture

Happy Learning!

Saturday, May 9, 2015

XtremIO Array Administration

I'm finally writing my post on XtremIO array administration. It has been due ever since I completed my post on xtremio-architecture.

So here we go... I'm covering the basics: GUI overview, array administration and monitoring. I've also covered a few basic commands that can be used to check the system status from the CLI. For details, check the array administration guide available at support.emc.com

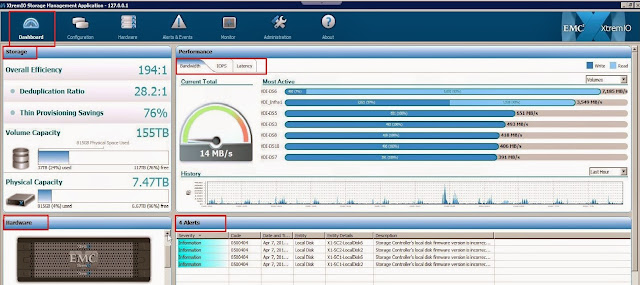

GUI Overview:

The picture below depicts the array dashboard, where you can check the system status at a glance. It shows an overview of capacity, alerts, performance and hardware status.

Hardware overview:

The pictures below depict the hardware overview of the array and its cable connectivity:

Front view:

Back View:

Cable Connectivity:

The picture illustrates the cable connections between the X-Brick components and where the host storage connections fit in.

Hardware Failure:

A hardware failure is highlighted by a change in color.

Storage Provisioning:

Below are the steps for storage provisioning on XtremIO array:

1) Create a new volume. You can also create multiple volumes in a single step and group them in a single folder.

2) Create an initiator group.

3) Map the volumes created in step 1 to the initiator group created in step 2. Select the volumes and the initiator group, then click Apply.

Array Alerts and Events:

The XtremIO array has a built-in dashboard for alert and event management. Events and alerts can be filtered by severity, type and time frame, which should let admins find the information they are looking for.

CLI Administration:

xmcli (admin)> show-clusters

Cluster-Name Index State Conn-State Num-of-Vols Vol-Size Logical-Space-In-Use Space-In-Use UD-SSD-Space Total-Writes Total-Reads Stop-Reason Size-and-Capacity

demo_sys4 1 active connected 15 155.006T 37.019T 815.314G 7.475T 633.341T 644.048T none 1X10TB
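The show-clusters figures can be turned into a rough space-saving ratio. This Python sketch assumes that Logical-Space-In-Use is the space consumed before data reduction and Space-In-Use is what actually sits on SSD, and that T and G are binary multiples; both are my assumptions, and the helper names are hypothetical:

```python
# Multipliers to GiB, ASSUMING the CLI uses binary units.
UNITS_IN_GIB = {"M": 1 / 1024, "G": 1, "T": 1024, "P": 1024 * 1024}


def to_gib(text):
    """Convert a value such as '37.019T' or '815.314G' to GiB."""
    return float(text[:-1]) * UNITS_IN_GIB[text[-1]]


def reduction_ratio(logical_in_use, physical_in_use):
    """Logical space in use divided by physical space in use."""
    return to_gib(logical_in_use) / to_gib(physical_in_use)


# Values copied from the show-clusters output for demo_sys4.
ratio = reduction_ratio("37.019T", "815.314G")

if __name__ == "__main__":
    print(f"{ratio:.1f}:1")
```

For the demo system this works out to roughly 46:1, which is plausible for a VDI environment where most desktops share the same blocks.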

xmcli (admin)> show-clusters-performance

Cluster-Name Index Write-BW(MB/s) Write-IOPS Read-BW(MB/s) Read-IOPS BW(MB/s) IOPS Total-Write-IOs Total-Read-IOs

demo_sys4 1 6.236 1109 8.032 1241 14.269 2350 11394017006 4136936530

xmcli (admin)> show-targets-performance

Name Index Write-BW(MB/s) Write-IOPS Read-BW(MB/s) Read-IOPS BW(MB/s) IOPS Total-Write-IOs Total-Read-IOs

X1-SC1-fc1 1 1.582 279 2.077 312 3.659 591 2985113428 1099644445

X1-SC1-fc2 2 1.432 245 1.793 314 3.225 559 2757886971 1002224794

X1-SC1-iscsi1 5 0.000 0 0.000 0 0.000 0 0 0

X1-SC1-iscsi2 6 0.000 0 0.000 0 0.000 0 0 0

X1-SC2-fc1 11 1.674 310 2.165 308 3.839 618 3174570251 1191688338

X1-SC2-fc2 12 1.548 274 1.994 305 3.542 579 2612228199 979161273

X1-SC2-iscsi1 15 0.000 0 0.000 0 0.000 0 0 0

X1-SC2-iscsi2 16 0.000 0 0.000 0 0.000 0 0 0

xmcli (admin)> show-storage-controllers

Storage-Controller-Name Index Mgr-Addr IB-Addr-1 IB-Addr-2 IPMI-Addr Brick-Name Index Cluster-Name Index State Unknown-Prop Enabled-State Unorderly-Stop-Reason Conn-State IPMI-State Unknown-Prop

X1-SC1 1 10.10.169.50 169.254.0.1 169.254.0.2 10.10.169.53 X1 1 demo_sys4 1 healthy healthy enabled none connected connected on

X1-SC2 2 10.10.169.51 169.254.0.17 169.254.0.18 10.10.169.54 X1 1 demo_sys4 1 healthy healthy enabled none connected connected on

xmcli (admin)> show-datetime

Mode NTP-Servers Cluster-Time Cluster-Time-Zone

xmcli (admin)> show-data-protection-groups

Name Index State Useful-SSD-Space UD-SSD-Space UD-SSD-Space-In-Use Rebuild-Progress Preparation-Progress Rebalance-Progress Rebuild-Prevention Brick-Name Index Cluster-Name Index

X1-DPG 1 normal 9.095T 7.475T 815.314G 0 0 0 none X1 1 demo_sys4 1

xmcli (admin)> show-xms

Name Index Xms-IP-Addr Xms-Mgmt-Ifc

xms 1 10.10.169.52 eth0

xmcli (admin)> show-storage-controllers-infiniband-ports

Name Index Port-Index Peer-Type Port-In-Peer-Index Link-Rate-In-Gbps Port-State Storage-Controller-Name Index Brick-Name Index Cluster-Name Index

X1-SC1-IB1 1 1 None 0 0 up X1-SC1 1 X1 1 demo_sys4 1

X1-SC1-IB2 2 2 None 0 0 up X1-SC1 1 X1 1 demo_sys4 1

X1-SC2-IB1 3 1 None 0 0 up X1-SC2 2 X1 1 demo_sys4 1

X1-SC2-IB2 4 2 None 0 0 up X1-SC2 2 X1 1 demo_sys4 1

xmcli (admin)> show-storage-controllers-infiniband-counters

Storage-Controller-Name Index Port-Index Symb-Errs Symb-Errs-pm Recovers Recovers-pm Lnk-Downed Lnk-Downed-pm Rcv-Errs Rcv-Errs-pm Rmt-Phys-Errs Rmt-Phys-Errs-pm Integ-Errs Integ-Errs-pm Rate-Gbps Rcv-Errs-pl Recovers-pl Lnk-Downed-pl Symb-Errs-pl Intg-Errs-pl Rmt-Phys-Errs-pm

X1-SC1 1 1

X1-SC1 1 2

X1-SC2 2 1

X1-SC2 2 2

xmcli (admin)> show-infiniband-switches-ports

Port-Index Peer-Type Port-In-Peer-Index Link-Rate-In-Gbps Port-State IBSwitch-Name IBSwitch-Index Cluster-Name Index

xmcli (admin)> show-infiniband-switches

Name Index Serial-Number Index-In-Cluster State FW-Version Part-Number Cluster-Name Index

xmcli (admin)> show-daes-controllers

Name Index Serial-Number State Index-In-DAE Location FW-Version Part-Number DAE-Name DAE-Index Brick-Name Index Cluster-Name Index

X1-DAE-LCC-A 1 US1D0121500226 healthy 1 bottom 149 303-104-000E X1-DAE 1 X1 1 demo_sys4 1

X1-DAE-LCC-B 2 US1D0121500700 healthy 2 top 149 303-104-000E X1-DAE 1 X1 1 demo_sys4 1

xmcli (admin)> show-daes-psus

Name Index Serial-Number Location-Index Power-Feed State Input Location HW-Revision Part-Number DAE-Name DAE-Index Brick-Name Index Cluster-Name Index

X1-DAE-PSU1 1 AC7B0121702789 1 feed_a healthy on left 2a10 071-000-541 X1-DAE 1 X1 1 demo_sys4 1

X1-DAE-PSU2 2 AC7B0121702790 2 feed_b healthy on right 2a10 071-000-541 X1-DAE 1 X1 1 demo_sys4 1

xmcli (admin)> show-daes

Name Index Serial-Number State FW-Version Part-Number Brick-Name Index Cluster-Name Index

X1-DAE 1 US1D1122100068 healthy 149 100-562-964 X1 1 demo_sys4 1

xmcli (admin)>

xmcli (admin)> add-volume alignment-offset=0 lb-size=512 vol-name="LDN_VDI_00" vol-size="20g" parent-folder-id="/"

Added Volume LDN_VDI_00 [16]
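When several volumes are needed, the add-volume line can be generated per volume. A small Python sketch that only builds the command text, using the same parameters as the command above; the function name and the extra volume names (LDN_VDI_01, LDN_VDI_02) are hypothetical:

```python
def add_volume_command(name, size, parent_folder="/", lb_size=512, alignment_offset=0):
    """Build an xmcli add-volume line with the parameters used in this post."""
    return (
        f"add-volume alignment-offset={alignment_offset} lb-size={lb_size} "
        f'vol-name="{name}" vol-size="{size}" parent-folder-id="{parent_folder}"'
    )


# Three 20g volumes; only LDN_VDI_00 exists in the real output above.
commands = [add_volume_command(f"LDN_VDI_{i:02d}", "20g") for i in range(3)]

if __name__ == "__main__":
    for command in commands:
        print(command)
```

Review the printed lines before pasting them into an xmcli session.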

xmcli (admin)>

xmcli (admin)> show-volumes

Volume-Name Index Vol-Size LB-Size VSG-Space-In-Use Offset Ancestor-Name Index VSG-Index Cluster-Name Index Parent-Folder-Name Index Unknown-Prop Unknown-Prop Unknown-Prop Total-Writes Total-Reads Certainty-State

HA_Heartbeats01 1 2G 512 17.355M 0 1 demo_sys4 1 / 1 enabled enabled enabled 10.793G 77.218G ok

HA_Heartbeats02 2 2G 512 17.629M 0 2 demo_sys4 1 / 1 enabled enabled enabled 10.575G 77.211G ok

LDN_VDI_00 16 20G 512 0 0 16 demo_sys4 1 / 1 enabled enabled enabled 0 0 ok

LUN_01 14 1G 512 0 0 14 demo_sys4 1 Host_DBA 2 disabled disabled disabled 0 0 ok

LUN_02 15 1G 512 0 0 15 demo_sys4 1 Host_DBA 2 disabled disabled disabled 0 0 ok

VDI-DS1 4 15T 512 3.604T 0 4 demo_sys4 1 / 1 disabled disabled disabled 9.974T 739.662G ok

VDI-DS2 5 15T 512 3.589T 0 5 demo_sys4 1 / 1 disabled disabled disabled 9.935T 722.896G ok

VDI-DS3 6 15T 512 3.604T 0 6 demo_sys4 1 / 1 disabled disabled disabled 9.975T 706.256G ok

VDI-DS4 7 15T 512 3.604T 0 7 demo_sys4 1 / 1 disabled disabled disabled 9.975T 690.904G ok

VDI-DS5 8 15T 512 3.604T 0 8 demo_sys4 1 / 1 disabled disabled disabled 9.975T 673.812G ok

VDI-DS6 9 15T 512 3.604T 0 9 demo_sys4 1 / 1 disabled disabled disabled 9.975T 657.464G ok

VDI-DS7 10 15T 512 3.604T 0 10 demo_sys4 1 / 1 disabled disabled disabled 9.976T 642.827G ok

VDI-DS8 11 15T 512 3.618T 0 11 demo_sys4 1 / 1 disabled disabled disabled 10.014T 628.075G ok

VDI-DS9 12 15T 512 3.618T 0 12 demo_sys4 1 / 1 disabled disabled disabled 10.013T 608.400G ok

VDI-DS10 13 15T 512 3.618T 0 13 demo_sys4 1 / 1 disabled disabled disabled 10.014T 592.012G ok

VDI_Infra1 3 5T 512 977.266G 0 3 demo_sys4 1 / 1 disabled disabled disabled 3.474T 199.027T ok

xmcli (admin)>

xmcli (admin)> show-volume vol-id="LDN_VDI_00"

Volume-Name Index Vol-Size LB-Size VSG-Space-In-Use Offset Ancestor-Name Index VSG-Index Cluster-Name Index Parent-Folder-Name Index Unknown-Prop Unknown-Prop Unknown-Prop Total-Writes Total-Reads NAA-Identifier Certainty-State

LDN_VDI_00 16 20G 512 0 0 16 demo_sys4 1 / 1 enabled enabled enabled 0 0 860f8099b3e34ab3 ok

xmcli (admin)>

xmcli (admin)> show-initiator-groups

IG-Name Index Parent-Folder-Name Index Certainty-State

esx_scvdi01 1 /VDI_Cluster 2 ok

esx_scvdi02 2 /VDI_Cluster 2 ok

esx_scvdi03 3 /VDI_Cluster 2 ok

esx_scvdi04 7 /VDI_Cluster 2 ok

esx_scvdi05 5 /VDI_Cluster 2 ok

esx_scvdi06 6 /VDI_Cluster 2 ok

esx_scvdi07 4 /VDI_Cluster 2 ok

esx_scvdi08 8 /VDI_Cluster 2 ok

esx_scvdi09 9 /VDI_Cluster 2 ok

esx_scvdi10 10 /VDI_Cluster 2 ok

esx_scvdi11 11 /VDI_Cluster 2 ok

esx_scvdi12 12 /VDI_Cluster 2 ok

esx_scvdi13 13 /VDI_Cluster 2 ok

esx_scvdi14 14 /VDI_Cluster 2 ok

esx_scvdi15 15 /VDI_Cluster 2 ok

esx_scvdi16 16 /VDI_Cluster 2 ok

esx_scvdi17 17 /VDI_Cluster 2 ok

esx_scvdi18 18 /VDI_Cluster 2 ok

esx_scvdi19 19 /VDI_Cluster 2 ok

esx_scvdi20 20 /VDI_Cluster 2 ok

esx_scvdi21 21 /VDI_Cluster 2 ok

esx_scvdi22 22 /VDI_Cluster 2 ok

esx_scvdi23 23 /VDI_Cluster 2 ok

esx_scvdi24 24 /VDI_Cluster 2 ok

esx_scvdi25 25 /VDI_Cluster 2 ok

esx_scvdi26 26 /VDI_Cluster 2 ok

xmcli (admin)>
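Output like the initiator-group listing above is easy to post-process once captured as text. A minimal Python sketch, assuming whitespace-separated columns with no empty cells; the function name is hypothetical, and repeated header names such as Index get a numeric suffix so they stay distinct:

```python
def parse_table(header_line, row_lines):
    """Turn a whitespace-separated xmcli table into a list of dicts."""
    headers = []
    seen = {}
    for name in header_line.split():
        seen[name] = seen.get(name, 0) + 1
        # Second 'Index' becomes 'Index_2', and so on.
        headers.append(name if seen[name] == 1 else f"{name}_{seen[name]}")
    rows = []
    for line in row_lines:
        values = line.split()
        if len(values) != len(headers):
            raise ValueError(f"column count mismatch: {line!r}")
        rows.append(dict(zip(headers, values)))
    return rows


header = "IG-Name Index Parent-Folder-Name Index Certainty-State"
rows = parse_table(header, [
    "esx_scvdi01 1 /VDI_Cluster 2 ok",
    "esx_scvdi04 7 /VDI_Cluster 2 ok",
])
```

This simple splitter does not work for tables with empty cells, such as show-volumes where Ancestor-Name is blank; the column-count check raises an error in that case instead of silently misaligning values.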
